Not Everyone Needs to Know Everything

The Modern Paradox: Transparency vs Decision Utility

In a world where “radical transparency” is often championed as the gold standard, there’s a silent cost we don’t talk about enough:

Decisions don’t usually collapse under secrecy, but they can decay under noise.

As decision-makers, we’re not suffering from a lack of access. We’re burdened by an abundance of unfiltered, unranked, and often unnecessary information.

Transparency has become the default, but without intentional design, it can start to erode signal, focus, and clarity.

Why Algorithms Don't Over-Explain

Here’s a counterintuitive thought:

In at least one respect, machines are better decision architects than most humans: they are ruthless about what not to share.

In machine learning systems:

Features that don’t improve predictive accuracy are typically dropped.

Attention mechanisms prioritize the most relevant context over completeness.

Black-box models, ironically, often outperform transparent but weak ones.

This doesn’t mean algorithms are “better”; it means they’re optimized for outcomes, not optics.

They aren’t concerned with how transparent they look, only with how effective they are.
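Feature pruning, the first item in the list above, is often done with a search such as greedy backward elimination. The sketch below is a minimal, hypothetical version: `evaluate` stands in for whatever validation score a real model would report, and the feature names and their "accuracy points" are made up for illustration.

```python
from typing import Callable, FrozenSet, Set

def backward_eliminate(features: Set[str],
                       evaluate: Callable[[FrozenSet[str]], float],
                       tolerance: float = 0.0) -> Set[str]:
    """Greedily drop any feature whose removal doesn't lower the score by more than `tolerance`."""
    current = frozenset(features)
    best = evaluate(current)
    changed = True
    while changed and len(current) > 1:
        changed = False
        for f in sorted(current):            # sorted for a deterministic order
            candidate = current - {f}
            score = evaluate(candidate)
            if score >= best - tolerance:    # this feature wasn't pulling its weight
                current, best = candidate, max(best, score)
                changed = True
                break                        # restart the scan on the smaller set
    return set(current)

# Hypothetical scorer: only two features carry signal; the rest add nothing
USEFUL = {"clicks": 20, "watch_time": 15}

def toy_accuracy(subset: FrozenSet[str]) -> float:
    return 50 + sum(USEFUL.get(f, 0) for f in subset)

kept = backward_eliminate({"clicks", "watch_time", "cursor_color", "font_size"}, toy_accuracy)
print(sorted(kept))  # ['clicks', 'watch_time']
```

Features that add nothing are removed because dropping them costs no accuracy, which is the "ruthless about what not to share" behaviour in miniature.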

The Curse of Context

In human systems, especially organizations, we often fall into a trap:

“If in doubt, share everything.”

But the reality is that context isn’t cumulative; it decays.

As we move further from the original decision point:

Information loses resolution

Relevance gets diluted

Stakeholders lose the ability to act on what they now merely understand

What begins as inclusive becomes intrusive. What was meant to clarify ends up overwhelming.

Reframing the Ideal: Lean Information Architecture

Just as lean manufacturing trims waste and lean data trims volume, lean information trims irrelevance.

This is not about withholding.

It’s about designing information with intention, guided by the principle:

Information exists to drive decisions, not decorate decks.

Key Pillars of Lean Information Sharing

a. Signal over Stream: Use threshold-based sharing. Not every metric needs a dashboard.

b. Role-Relevance Mapping: Align what’s shared to who needs it, not to who could see it.

c. Decision-Centric Design: Tailor the format and level of detail to the decision type (strategic, operational, or experimental).
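The first two pillars can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a prescribed tool: the `Metric` fields, the per-metric `threshold`, the role names, and the `ROLE_NEEDS` mapping are all hypothetical. A metric reaches someone only if their role acts on it and it has moved past its threshold.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

@dataclass
class Metric:
    name: str
    value: float
    baseline: float
    threshold: float  # minimum absolute change worth reporting (assumed per metric)

# Hypothetical role map: which metrics each role actually acts on
ROLE_NEEDS: Dict[str, Set[str]] = {
    "ops_lead": {"error_rate", "queue_depth"},
    "cfo": {"monthly_burn"},
}

def crosses_threshold(m: Metric) -> bool:
    """Signal over stream: surface a metric only when it moves enough."""
    return abs(m.value - m.baseline) >= m.threshold

def brief_for(role: str, metrics: List[Metric]) -> List[str]:
    """Role-relevance mapping: keep what this role needs, then only what moved."""
    needed = ROLE_NEEDS.get(role, set())
    return [m.name for m in metrics if m.name in needed and crosses_threshold(m)]

metrics = [
    Metric("error_rate", 0.031, 0.010, 0.005),
    Metric("queue_depth", 120, 115, 50),
    Metric("monthly_burn", 410_000, 400_000, 25_000),
]
print(brief_for("ops_lead", metrics))  # ['error_rate']
print(brief_for("cfo", metrics))       # []
```

An empty brief is a valid outcome here: nothing the CFO acts on has moved enough to warrant attention.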

A Note on Big Data vs Lean Data

The early 2010s heralded Big Data: collect everything, store everything, analyse later.

But we now know that more isn’t always better.

Lean Data emerged in response to this clutter, especially in development and social impact contexts, with a focus on:

Smaller datasets

Faster feedback

More actionable insights

In decision design, this is the shift we need too. Not more transparency. More design.

Decision Hygiene

Think of it like this:

Too little information = error

Too much information = noise

The right information = clarity

This is where decision hygiene matters: curating cognitive environments where choices are not just visible, but viable.

How Do You Know What the “Right” Information Is?

Let’s turn philosophy into practice, because relevance is not a gut feeling; it’s a designed outcome.

Here is a framework for moving toward leaner, more relevant information environments:

a. Anchor to the Decision, Not the Report

Start by asking: What decision needs to be made and by whom?

Every piece of information should answer at least one of these questions:

What action should we take?

What risk should we prepare for?

What trade-off are we navigating?

If it doesn’t support a decision, it doesn’t belong.

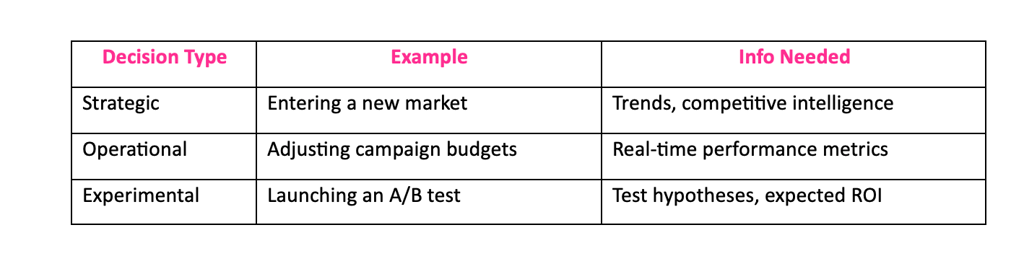
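One way to operationalize this test is to tag each item with the question it helps answer and drop anything untagged. The sketch assumes such tags exist (they would come from whoever prepares the material); the item titles are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set

# The three questions from the text, used as tags
DECISION_QUESTIONS = {"action", "risk", "trade-off"}

@dataclass
class InfoItem:
    title: str
    answers: Set[str] = field(default_factory=set)  # questions this item helps answer (assumed tagging)

def decision_ready(items: List[InfoItem]) -> List[InfoItem]:
    """Keep only items that help answer at least one decision question."""
    return [item for item in items if item.answers & DECISION_QUESTIONS]

items = [
    InfoItem("Supplier delay forecast", {"risk"}),
    InfoItem("Full meeting attendance log"),
    InfoItem("Build vs. buy cost comparison", {"trade-off"}),
]
print([item.title for item in decision_ready(items)])
# ['Supplier delay forecast', 'Build vs. buy cost comparison']
```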

b. Classify Decisions by Type

Information requirements differ by decision type (strategic, operational, or experimental).

Tailor not just what you share, but also when and how.

c. Use the 3R Filter: Relevance, Recency, Resolution

Before sharing any input, ask:

Relevance: Does this change what we’d decide?

Recency: Is this up-to-date enough to be trusted?

Resolution: Is the detail appropriate for this level of decision?

If the answer to any of these is no, hold it back or reframe it.
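The 3R filter translates fairly directly into code. This sketch makes several assumptions worth naming: relevance is tested counterfactually (does adding the input flip the output of a decision rule?), `toy_decide` is a made-up rule, and the detail labels in `RESOLUTION_FOR` are a hypothetical mapping onto the strategic, operational, and experimental decision types. The function returns the checks an input fails, so an empty list means share it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List

# Hypothetical mapping from decision type to the level of detail it calls for
RESOLUTION_FOR = {"strategic": "summary", "operational": "detailed", "experimental": "raw"}

@dataclass
class Input:
    name: str
    signal: float          # toy measure of how strongly it argues for acting
    observed_at: datetime
    detail: str            # "summary", "detailed" or "raw" (assumed labels)

Decide = Callable[[List[Input]], str]

def failed_3r_checks(item: Input, context: List[Input], decide: Decide,
                     decision_type: str, max_age: timedelta, now: datetime) -> List[str]:
    """Return the 3R checks an input fails; an empty list means share it."""
    failed = []
    # Relevance: would adding this input change what we'd decide?
    if decide(context + [item]) == decide(context):
        failed.append("relevance")
    # Recency: is it recent enough to be trusted?
    if now - item.observed_at > max_age:
        failed.append("recency")
    # Resolution: is its level of detail right for this type of decision?
    if item.detail != RESOLUTION_FOR[decision_type]:
        failed.append("resolution")
    return failed

def toy_decide(inputs: List[Input]) -> str:
    """Hypothetical decision rule: act once the combined signal reaches 1.0."""
    return "act" if sum(i.signal for i in inputs) >= 1.0 else "wait"

now = datetime(2024, 6, 1)
context = [Input("pilot results", 0.7, now - timedelta(days=3), "summary")]
candidates = [
    Input("churn alert", 0.4, now - timedelta(days=1), "summary"),
    Input("old survey extract", 0.4, now - timedelta(days=1500), "raw"),
    Input("office headcount", 0.0, now, "summary"),
]
for c in candidates:
    print(c.name, failed_3r_checks(c, context, toy_decide, "strategic", timedelta(days=30), now))
# churn alert []
# old survey extract ['recency', 'resolution']
# office headcount ['relevance']
```

Returning the failed checks, rather than a bare yes or no, preserves the choice between holding an input back and reframing it: a resolution failure might call for a summary or a drill-down, while a relevance failure suggests leaving the input out.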

How Do Algorithms Decide What’s Relevant?

This is where the real-world analogy to human decision design gets fascinating.

1. In Big Data:

Relevance = Signal Over Noise

Algorithms are trained to:

Prioritize high-signal inputs (e.g., click behaviour over idle scrolling)

Use statistical models to predict what matters (e.g., Bayesian filters, decision trees)
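Bayesian filters are named above as one such model. As a hedged illustration only (not how any particular platform works), here is a minimal naive Bayes filter in the style of classic spam filters, trained on a handful of made-up event descriptions that have been hand-labeled "signal" or "noise".

```python
import math
from collections import Counter, defaultdict
from typing import DefaultDict, List, Set, Tuple

Model = Tuple[Counter, DefaultDict[str, Counter], Set[str]]

def train(examples: List[Tuple[List[str], str]]) -> Model:
    """Count labels and per-label token frequencies (multinomial naive Bayes)."""
    label_counts: Counter = Counter(label for _, label in examples)
    token_counts: DefaultDict[str, Counter] = defaultdict(Counter)
    vocab: Set[str] = set()
    for tokens, label in examples:
        token_counts[label].update(tokens)
        vocab.update(tokens)
    return label_counts, token_counts, vocab

def classify(tokens: List[str], model: Model) -> str:
    """Return the label with the highest log posterior, using add-one smoothing."""
    label_counts, token_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = "", -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior
        denom = sum(token_counts[label].values()) + len(vocab)
        for t in tokens:
            # Laplace smoothing: unseen tokens get a small non-zero probability
            score += math.log((token_counts[label][t] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Made-up training data: user events described as tokens, labeled by hand
examples = [
    (["click", "purchase"], "signal"),
    (["click", "share"], "signal"),
    (["scroll", "idle"], "noise"),
    (["scroll", "hover"], "noise"),
]
model = train(examples)
print(classify(["click"], model))           # signal
print(classify(["scroll", "idle"], model))  # noise
```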

For example, YouTube’s recommendation algorithm:

Doesn’t show you everything trending

It shows what’s trending for someone like you, right now
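YouTube’s real ranking system is far more complex and not fully public, so the following is only a toy sketch of the idea in the text: score each item by popularity, by similarity to this viewer, and by freshness, then keep the top few. The topic weights, half-life, and video data are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Video:
    title: str
    topics: Dict[str, float]   # topic -> weight (hypothetical features)
    views_last_hour: int
    age_hours: float

def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse topic vectors."""
    dot = sum(a[t] * b.get(t, 0.0) for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def personalized_trending(user: Dict[str, float], videos: List[Video],
                          k: int = 2, half_life_hours: float = 6.0) -> List[str]:
    """Rank by popularity x similarity to this user x freshness; keep the top k."""
    def score(v: Video) -> float:
        freshness = 0.5 ** (v.age_hours / half_life_hours)  # halves every half-life
        return math.log1p(v.views_last_hour) * cosine(user, v.topics) * freshness
    return [v.title for v in sorted(videos, key=score, reverse=True)[:k]]

user = {"cooking": 1.0, "travel": 0.5}
videos = [
    Video("Global celebrity news", {"celebrity": 1.0}, 90_000, 1),
    Video("15-minute ramen", {"cooking": 1.0}, 4_000, 2),
    Video("Street food in Hanoi", {"cooking": 0.6, "travel": 0.8}, 2_500, 3),
    Video("Last month's bread recipe", {"cooking": 1.0}, 4_000, 720),
]
print(personalized_trending(user, videos))  # ['15-minute ramen', 'Street food in Hanoi']
```

The most-viewed video never surfaces for this viewer because it shares no topics with them, and the stale recipe is discounted almost to zero: "for someone like you" and "right now" are two separate multipliers.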

2. In Deep Learning: Attention Mechanisms

In large language models, transformer architectures use something called attention scores:

They assign dynamic weights to each input token (roughly, a word or part of a word)

The “right” information becomes whatever receives the highest attention weight in context

It’s not unlike your brain asking:

“What should I focus on in this moment to decide?”

That’s algorithmic lean thinking.
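The attention weights described above can be shown with a bare-bones version of scaled dot-product attention. This is a simplified sketch: a single query, no learned projection matrices, and toy 2-dimensional vectors picked by hand. In a real transformer the query, key, and value vectors are learned, and many attention heads run in parallel.

```python
import math
from typing import List

def softmax(xs: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query: List[float], keys: List[List[float]]) -> List[float]:
    """Scaled dot-product attention weights: softmax(q . k_i / sqrt(d))."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

def attend(query: List[float], keys: List[List[float]], values: List[List[float]]) -> List[float]:
    """Blend the value vectors, each weighted by its attention weight."""
    weights = attention_weights(query, keys)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]

# Toy, hand-picked vectors (hypothetical): the query "asks about" the first dimension
query = [1.0, 0.0]
keys = [[2.0, 0.0], [1.0, 1.0], [0.0, 2.0]]   # e.g. "deadline", "budget", "weather"
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]

weights = attention_weights(query, keys)
print([round(w, 2) for w in weights])  # [0.58, 0.28, 0.14]
print(attend(query, keys, values))      # leans toward the first value vector
```

Nothing is deleted: every input still receives some weight, but the output is dominated by what matters most for this query.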

What Can Humans Learn From This?

Start with the Decision Prompt

Before asking “What do I need to know?” ask: “What decision do I need to make?”

Weight Inputs Dynamically

Not all data is equal. Context and timing change value.

(Algorithms adjust weights constantly; humans should too.)

Don’t Confuse Transparency with Clarity

Algorithms skip irrelevant data by design.

Humans often hoard it out of fear.

Learn to trust omission as a form of wisdom.

The Overpromise of Transparency

Too much transparency can hurt decision-making: past a certain point, each additional input adds more noise than signal.

In Conclusion

Transparency was never meant to be a firehose.

It's time we move from information sharing to decision shaping.

Let algorithms inspire us not in their logic, but in their discipline.

Share only what improves outcomes. Not what satisfies optics.

Because in decision-making, less isn’t automatically more, but less by design absolutely is!